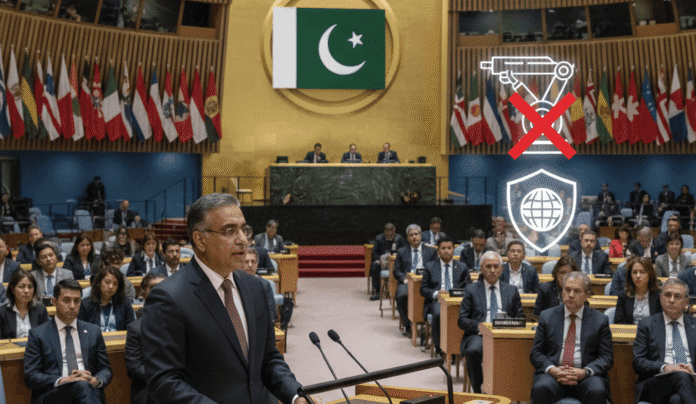

Pakistan’s Clear Warning at the United Nations

At the United Nations, Pakistan issued a strong message about the dangers of using Artificial Intelligence in warfare. The statement focused on one main point: “No Human, No AI Weapons.” In simple terms, Pakistan warned the world that machines should never be allowed to make life-and-death decisions without human oversight.

This marks a bold stance in the global debate on autonomous weapons. As AI becomes more advanced, so does the risk of “killer robots” that can identify and attack targets without human control. Pakistan’s call for a ban on military AI that operates without human control highlights the country’s growing role in shaping international discussions on technology and security.

Why Pakistan Raised the Alarm

Pakistan’s position on AI in warfare is guided by three concerns:

- Accountability: If AI-controlled weapons make mistakes, no human may be held responsible.

- Security Risks: An AI arms race could destabilize global peace, creating unpredictable conflicts.

- Humanitarian Issues: Machines cannot distinguish civilians from combatants with the moral judgment that humans apply.

Pakistan stressed that while AI has many positive uses in healthcare, agriculture, and education, its use in warfare is too dangerous without rules.

The Global Debate on AI Weapons

The world is divided on the issue. Some powerful nations are investing heavily in AI military programs, claiming these systems improve speed and precision. But others, including Pakistan, argue that such weapons are a threat to humanity itself.

The United Nations has been holding discussions on this issue, but so far, no binding treaty exists.

Pakistan’s Proposal at the UN

In its official statement, Pakistan urged the world to adopt a three-part approach:

- Ban fully autonomous weapons that operate without human control.

- Introduce a global treaty ensuring human oversight in all AI-based military systems.

- Promote cooperation among nations to share knowledge and prevent misuse of AI.

This approach is not just about technology; it is about ethics, accountability, and global peace.

Real-World Examples Raise Concerns

Reports suggest that AI-driven drones and automated defense systems have already been used in recent conflicts. If accurate, these reports underscore the urgency of regulating AI in warfare.

Without strong laws, battlefields could soon be dominated by machines that act on their own. Pakistan’s call for a ban on such weapons is a warning to stop this future before it becomes reality.

Internal and External Balance

Pakistan continues to promote AI development for peaceful purposes. Local initiatives encourage AI in education, business, and governance.

At the same time, Pakistan insists that AI weapons without human oversight must be stopped. This balanced approach shows that the country is not against AI, but against its misuse.

Why This Message Matters

The slogan “No Human, No AI Weapons” captures Pakistan’s perspective in simple words. It is a reminder that while AI can be useful, it cannot replace human morality in war.

Pakistan’s demand for a ban on autonomous AI weapons is not only about security but also about preserving human dignity. In an age of rapid technological growth, these principles must guide global policies.

Conclusion

The rise of AI is inevitable, but its military use must be controlled. Pakistan’s strong stand at the UN delivers a clear message to the world: machines should never decide who lives and who dies.

By calling for a ban on autonomous AI weapons, Pakistan joins international voices demanding responsibility, safety, and peace.

If global leaders listen, humanity can shape AI for progress rather than destruction. If they do not, the future may be dominated by weapons without conscience, a risk too great to take.